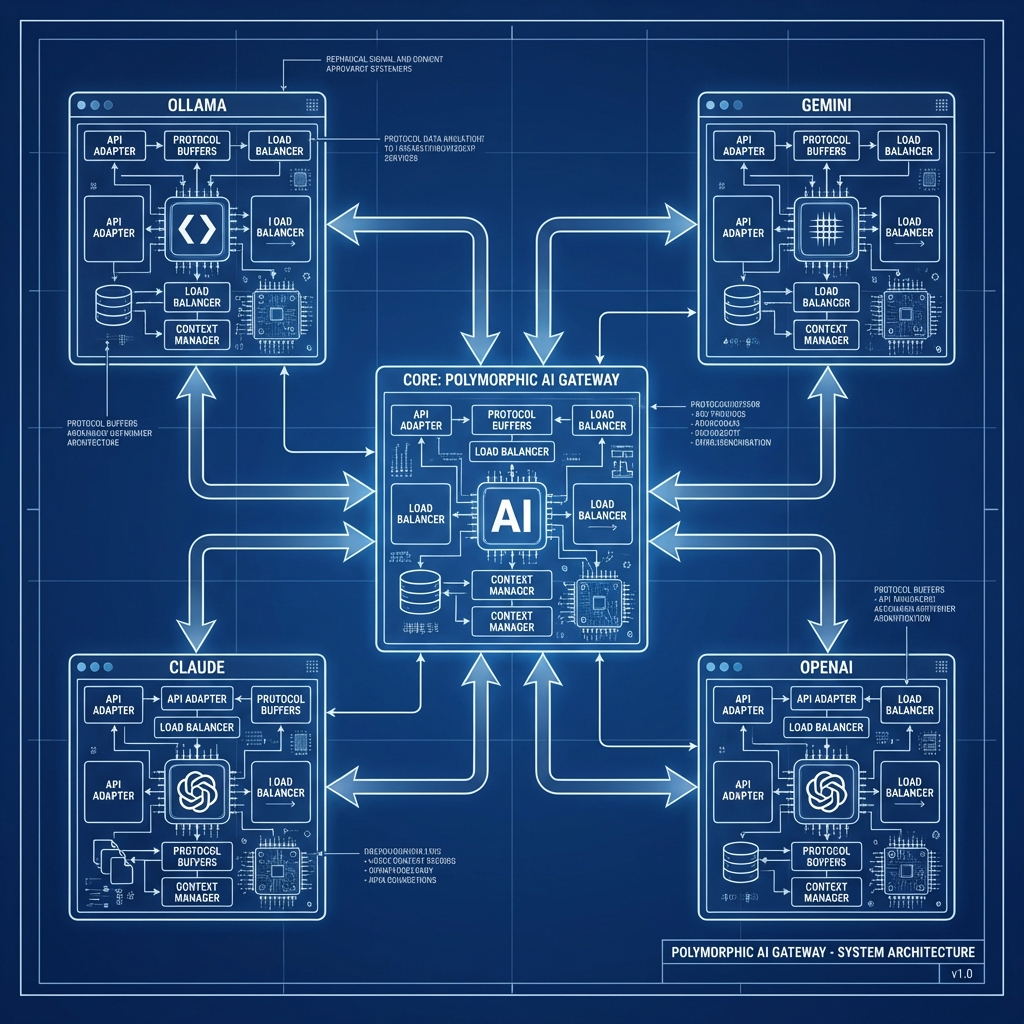

The challenge of building an “AI Gateway” isn’t connecting to APIs. It’s connecting to everything else. When we designed switchAILocal, we didn’t just want another proxy for OpenAI. We wanted a “Universal Adapter” that could handle the chaos of the real world: local binaries, proprietary protocols, and cloud endpoints.

The result is the Polymorphic Executor Pattern.

The Core Abstraction

To the outside world (your IDE), switchAILocal looks like a simple HTTP server. But inside, it’s a shape-shifting engine. It relies on a single Go interface:

```go
type Executor interface {
	// Execute runs one request against a backend and returns its response.
	Execute(ctx context.Context, request *Request) (*Response, error)
}
```

This simple contract lets us implement very different backends behind the same API surface.
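The snippet above only declares the interface. Here is a minimal, self-contained sketch of how two unrelated implementations plug into it. The `Request`/`Response` fields and the `staticExecutor`/`upperExecutor` types are hypothetical stand-ins, not switchAILocal's actual types. The point is that `ask` only ever sees an `Executor` and never needs to know which kind it holds.

```go
package main

import (
	"context"
	"fmt"
	"strings"
)

// Request and Response are hypothetical, simplified stand-ins for the
// gateway's internal types.
type Request struct {
	Model  string
	Prompt string
}

type Response struct {
	Text string
}

// Executor is the single contract every backend implements.
type Executor interface {
	Execute(ctx context.Context, request *Request) (*Response, error)
}

// staticExecutor always answers with a fixed reply (hypothetical).
type staticExecutor struct{ reply string }

func (s staticExecutor) Execute(_ context.Context, _ *Request) (*Response, error) {
	return &Response{Text: s.reply}, nil
}

// upperExecutor echoes the prompt in upper case (hypothetical).
type upperExecutor struct{}

func (upperExecutor) Execute(ctx context.Context, r *Request) (*Response, error) {
	if err := ctx.Err(); err != nil {
		return nil, err // respect cancellation like a real backend would
	}
	return &Response{Text: strings.ToUpper(r.Prompt)}, nil
}

// ask works with any Executor; it depends only on the interface.
func ask(e Executor, prompt string) (string, error) {
	resp, err := e.Execute(context.Background(), &Request{Prompt: prompt})
	if err != nil {
		return "", err
	}
	return resp.Text, nil
}

func main() {
	for _, e := range []Executor{staticExecutor{reply: "pong"}, upperExecutor{}} {
		fmt.Println(ask(e, "ping"))
	}
}
```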

The Three Modes of Execution

This polymorphism allows switchAILocal to support three distinct “species” of AI providers simultaneously:

1. Network Executors (The Standard)

- What they do: Make HTTP/SSE requests to a remote server.

- Examples: `ollama:`, `openai:`, `lmstudio:`.

- Use Case: Connecting to a standard local inference server or a public cloud API.
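A minimal sketch of a Network Executor, assuming a non-streaming call shaped like Ollama's `/api/generate` endpoint (a JSON body with `model`, `prompt` and `stream: false`, and the answer in a `response` field). SSE streaming, auth headers and the OpenAI-compatible body used by `openai:` or `lmstudio:` are left out. `networkExecutor` and `runDemo` are hypothetical names, and `runDemo` uses an in-process fake backend so the sketch runs without a real server.

```go
package main

import (
	"bytes"
	"context"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

type Request struct{ Model, Prompt string }
type Response struct{ Text string }

// networkExecutor is a hypothetical Network Executor speaking a
// non-streaming, Ollama-style /api/generate JSON protocol.
type networkExecutor struct {
	baseURL string
	client  *http.Client
}

func (n *networkExecutor) Execute(ctx context.Context, r *Request) (*Response, error) {
	body, err := json.Marshal(map[string]any{
		"model":  r.Model,
		"prompt": r.Prompt,
		"stream": false, // ask for one JSON object instead of a stream
	})
	if err != nil {
		return nil, err
	}
	req, err := http.NewRequestWithContext(ctx, http.MethodPost, n.baseURL+"/api/generate", bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	resp, err := n.client.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("backend returned %s", resp.Status)
	}
	var out struct {
		Response string `json:"response"`
	}
	if err := json.NewDecoder(resp.Body).Decode(&out); err != nil {
		return nil, err
	}
	return &Response{Text: out.Response}, nil
}

// runDemo points the executor at a fake in-process backend that echoes the prompt.
func runDemo(prompt string) (string, error) {
	fake := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, req *http.Request) {
		var in struct {
			Prompt string `json:"prompt"`
		}
		if err := json.NewDecoder(req.Body).Decode(&in); err != nil {
			http.Error(w, err.Error(), http.StatusBadRequest)
			return
		}
		json.NewEncoder(w).Encode(map[string]string{"response": "echo: " + in.Prompt})
	}))
	defer fake.Close()

	e := &networkExecutor{baseURL: fake.URL, client: fake.Client()}
	resp, err := e.Execute(context.Background(), &Request{Model: "llama3", Prompt: prompt})
	if err != nil {
		return "", err
	}
	return resp.Text, nil
}

func main() {
	fmt.Println(runDemo("hello"))
}
```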

2. Process Executors (The Unique)

- What they do: Spawn a local subprocess and manage its stdin/stdout.

- Examples: `geminicli:`, `claudecli:`, `vibe:`.

- Use Case: This is our unique “No-Key” solution. By wrapping the CLI tool directly, we leverage the user’s existing authenticated session (stored in the system keychain) without ever handling secrets in the gateway configuration.
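A minimal sketch of a Process Executor, assuming the wrapped CLI reads the prompt on stdin and prints its answer on stdout. The real CLIs' flags and I/O conventions are not assumed here; `processExecutor` and `run` are hypothetical names, and `cat` stands in for an AI CLI so the example runs on a Unix-like system. Note that the executor never touches an API key: the child process simply inherits the environment and uses whatever session the CLI already has.

```go
package main

import (
	"bytes"
	"context"
	"fmt"
	"os/exec"
	"strings"
	"time"
)

type Request struct{ Model, Prompt string }
type Response struct{ Text string }

// processExecutor is a hypothetical Process Executor: it runs a local CLI,
// writes the prompt to its stdin, and reads the answer from its stdout.
type processExecutor struct {
	binary string   // executable name, assumed to be on PATH
	args   []string // fixed arguments for the CLI (illustrative)
}

func (p processExecutor) Execute(ctx context.Context, r *Request) (*Response, error) {
	// CommandContext kills the subprocess if ctx is cancelled or times out.
	cmd := exec.CommandContext(ctx, p.binary, p.args...)
	// cmd.Env is left nil, so the child inherits this process's environment;
	// the gateway itself supplies no credentials.
	cmd.Stdin = strings.NewReader(r.Prompt)
	var stdout, stderr bytes.Buffer
	cmd.Stdout = &stdout
	cmd.Stderr = &stderr
	if err := cmd.Run(); err != nil {
		return nil, fmt.Errorf("%s failed: %w (stderr: %s)", p.binary, err, strings.TrimSpace(stderr.String()))
	}
	return &Response{Text: strings.TrimSpace(stdout.String())}, nil
}

// run executes one prompt with a timeout so a hung CLI cannot block forever.
func run(p processExecutor, prompt string) (string, error) {
	ctx, cancel := context.WithTimeout(context.Background(), 30*time.Second)
	defer cancel()
	resp, err := p.Execute(ctx, &Request{Prompt: prompt})
	if err != nil {
		return "", err
	}
	return resp.Text, nil
}

func main() {
	// `cat` stands in for a real AI CLI: it echoes stdin to stdout.
	fmt.Println(run(processExecutor{binary: "cat"}, "hello from stdin"))
}
```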

3. Bridge Executors (The Future)

- What they do: Establish encrypted tunnels to remote agents.

- Examples: `opencode:`.

- Use Case: Connecting to complex agentic coding environments.
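The prefixes in the examples above (`ollama:`, `geminicli:`, `opencode:`) suggest that the model string selects which executor handles a request. A minimal sketch of such prefix-based routing follows, assuming a `provider:model` format and a plain map registry; both are assumptions, not necessarily how switchAILocal resolves names. `labelExecutor`, `Router` and the model names are hypothetical.

```go
package main

import (
	"context"
	"fmt"
	"strings"
)

type Request struct{ Model, Prompt string }
type Response struct{ Text string }

type Executor interface {
	Execute(ctx context.Context, request *Request) (*Response, error)
}

// labelExecutor is a hypothetical stand-in that reports which kind of backend ran.
type labelExecutor struct{ kind string }

func (l labelExecutor) Execute(_ context.Context, r *Request) (*Response, error) {
	return &Response{Text: l.kind + " ran " + r.Model}, nil
}

// Router maps a provider prefix such as "ollama" to its executor.
// It satisfies Executor itself, so callers cannot tell it from a single backend.
type Router struct {
	executors map[string]Executor
}

func (rt *Router) Execute(ctx context.Context, r *Request) (*Response, error) {
	// Split at the first colon only, so "ollama:llama3:8b" keeps "llama3:8b".
	provider, model, ok := strings.Cut(r.Model, ":")
	if !ok {
		return nil, fmt.Errorf("model %q has no provider prefix", r.Model)
	}
	e, found := rt.executors[provider]
	if !found {
		return nil, fmt.Errorf("no executor registered for %q", provider)
	}
	inner := *r // copy so the caller's request is not mutated
	inner.Model = model
	return e.Execute(ctx, &inner)
}

func newDemoRouter() *Router {
	return &Router{executors: map[string]Executor{
		"ollama":    labelExecutor{kind: "network"},
		"geminicli": labelExecutor{kind: "process"},
		"opencode":  labelExecutor{kind: "bridge"},
	}}
}

// route sends a fixed prompt to the given "provider:model" string.
func route(rt *Router, model string) (string, error) {
	resp, err := rt.Execute(context.Background(), &Request{Model: model, Prompt: "hi"})
	if err != nil {
		return "", err
	}
	return resp.Text, nil
}

func main() {
	rt := newDemoRouter()
	for _, m := range []string{"ollama:llama3", "geminicli:gemini-pro", "unknown:x"} {
		fmt.Println(route(rt, m))
	}
}
```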

The Translation Pipeline: A Universal “Babel Fish”

Supporting these diverse backends creates a data problem.

- Ollama speaks HTTP JSON.

- Gemini CLI speaks command-line arguments.

- Cursor speaks OpenAI JSON.

To solve this, we built a Translation Pipeline that acts as a universal interpreter.

- Ingest: Takes the standard OpenAI request from your client.

- Normalize: Converts it to an internal canonical schema.

- Adapt: Transforms it for the specific target.
  - For Ollama: Remaps the JSON to match the Ollama API.
  - For CLI: Converts the JSON prompt into shell arguments (e.g., `gemini prompt "..."`).
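A minimal sketch of the three stages, assuming the client sends an OpenAI chat-completions body whose message `content` is a plain string (the real API also allows arrays of content parts). The `Canonical` schema, the function names, and the flattening of messages into `role: content` lines are illustrative assumptions. The Ollama side targets its `/api/chat` body, and the leading `prompt` argument only mirrors the `gemini prompt "..."` example in the text, not a confirmed CLI flag.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// openAIRequest holds only the fields this sketch reads from an OpenAI chat body.
type openAIRequest struct {
	Model    string `json:"model"`
	Messages []struct {
		Role    string `json:"role"`
		Content string `json:"content"`
	} `json:"messages"`
}

// Message and Canonical form a hypothetical internal schema.
type Message struct{ Role, Content string }

type Canonical struct {
	Model    string
	Messages []Message
}

// ingestAndNormalize parses the client's OpenAI JSON into the canonical form.
func ingestAndNormalize(body []byte) (Canonical, error) {
	var in openAIRequest
	if err := json.Unmarshal(body, &in); err != nil {
		return Canonical{}, err
	}
	c := Canonical{Model: in.Model}
	for _, m := range in.Messages {
		c.Messages = append(c.Messages, Message{Role: m.Role, Content: m.Content})
	}
	return c, nil
}

// toOllama adapts the canonical request to a non-streaming Ollama /api/chat body.
func toOllama(c Canonical) ([]byte, error) {
	type msg struct {
		Role    string `json:"role"`
		Content string `json:"content"`
	}
	out := struct {
		Model    string `json:"model"`
		Messages []msg  `json:"messages"`
		Stream   bool   `json:"stream"`
	}{Model: c.Model}
	for _, m := range c.Messages {
		out.Messages = append(out.Messages, msg{Role: m.Role, Content: m.Content})
	}
	return json.Marshal(out)
}

// toCLIArgs flattens the conversation into an argv slice for a CLI call.
func toCLIArgs(c Canonical) []string {
	parts := make([]string, 0, len(c.Messages))
	for _, m := range c.Messages {
		parts = append(parts, m.Role+": "+m.Content)
	}
	return []string{"prompt", strings.Join(parts, "\n")}
}

func main() {
	body := []byte(`{"model":"llama3","messages":[{"role":"user","content":"Hello"}]}`)
	c, err := ingestAndNormalize(body)
	if err != nil {
		panic(err)
	}
	ollamaBody, err := toOllama(c)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(ollamaBody))
	fmt.Println(toCLIArgs(c))
}
```

Because the adapted arguments are handed to the subprocess as an argv slice (for example via Go's `exec.Command`) rather than through a shell, the prompt never needs shell quoting, which also keeps user-supplied text from being interpreted as shell syntax.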

Resilience by Design

Because this system is polymorphic, it allows for Interchangeable Failover.

If `geminicli:` (a Process Executor) fails, the router can immediately retry the request on `gemini-api:` (a Network Executor), because both satisfy the same `Executor` interface. Whichever backend works gets used.
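A minimal sketch of interchangeable failover, assuming a simple ordered chain: try each executor in turn and return the first success. `FailoverExecutor`, `stubExecutor` and `ask` are hypothetical names, and the stubs only simulate a down `geminicli:` backend and a healthy `gemini-api:` one.

```go
package main

import (
	"context"
	"errors"
	"fmt"
)

type Request struct{ Model, Prompt string }
type Response struct{ Text string }

type Executor interface {
	Execute(ctx context.Context, request *Request) (*Response, error)
}

// FailoverExecutor tries each executor in order and returns the first success.
// It is itself an Executor, so it can stand in anywhere a single backend would.
type FailoverExecutor struct {
	chain []Executor
}

func (f FailoverExecutor) Execute(ctx context.Context, r *Request) (*Response, error) {
	var errs []error
	for _, e := range f.chain {
		if err := ctx.Err(); err != nil {
			return nil, err // the caller gave up; stop trying backends
		}
		resp, err := e.Execute(ctx, r)
		if err == nil {
			return resp, nil
		}
		errs = append(errs, err) // remember why this backend failed, then try the next
	}
	if len(errs) == 0 {
		return nil, errors.New("failover: no executors configured")
	}
	return nil, fmt.Errorf("failover: all executors failed: %w", errors.Join(errs...))
}

// stubExecutor is a hypothetical stand-in for a real backend.
type stubExecutor struct {
	name string
	down bool // simulate an unavailable backend
}

func (s stubExecutor) Execute(_ context.Context, _ *Request) (*Response, error) {
	if s.down {
		return nil, fmt.Errorf("%s: unavailable", s.name)
	}
	return &Response{Text: "answered by " + s.name}, nil
}

// ask sends one prompt through a failover chain built from the given executors.
func ask(chain ...Executor) (string, error) {
	resp, err := FailoverExecutor{chain: chain}.Execute(context.Background(), &Request{Prompt: "hi"})
	if err != nil {
		return "", err
	}
	return resp.Text, nil
}

func main() {
	// geminicli is down, so the request falls through to gemini-api.
	fmt.Println(ask(stubExecutor{name: "geminicli", down: true}, stubExecutor{name: "gemini-api"}))
}
```

Whether a failed request is safe to retry (for example, once a stream has already started sending tokens to the client) is a policy question this sketch deliberately ignores.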

This architecture is what makes switchAILocal the most robust foundation for local AI development.

Sebastian Schkudlara

