If you’ve ever stared at a terminal trying to remember if the flag is --agent-name, --name, or just -n, you know the pain. And if you’ve ever wished you could just tell your CLI what you want instead of hunting through man pages—this one’s for you.

Enter Traylinx Cortex: a new “brain” layer for the Traylinx CLI that lets you manage AI agents, P2P networks, and distributed systems using plain, everyday English.

No syntax gymnastics. No searching for docs. Just talk to your terminal like you’d talk to a teammate.

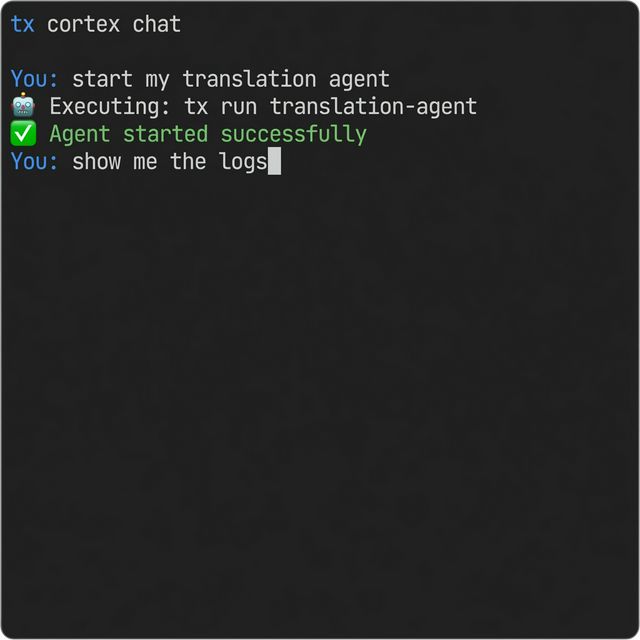

$ tx cortex chat

You: start my translation agent

🤖 Executing: tx run translation-agent

✅ Agent started successfully

You: show me the logs

🤖 Executing: tx logs translation-agent

[2026-02-05 10:30:15] INFO: Agent initialized

[2026-02-05 10:30:16] INFO: Listening on port 8080

You: find agents that can search the web

🤖 Executing: tx discover --capability search

Found 3 agents...

That’s the gist of it. Natural language goes in, valid CLI commands come out. But getting there required some interesting engineering under the hood.

The Problem: CLIs Are Powerful, But They Don’t Speak Human

Command-line interfaces are the backbone of our work. They’re fast, scriptable, and give us precise control. But they also have a steep learning curve.

- Syntax overload: Every tool has its own quirks for flags and arguments.

- Context switching: Constantly alt-tabbing between your terminal and documentation breaks your flow.

- Cognitive friction: Even for tools we use daily, we forget the details.

For a system like Traylinx—which handles AI agents, P2P networking, auth, memory, and orchestration—the surface area is huge. You’ve got:

- tx run / tx stop / tx logs (Lifecycle)

- tx discover / tx call (Networking)

- tx whoami / tx connect (Auth)

- tx cortex memory (Context)

- And a whole lot more.

It’s a lot to keep in your head. Cortex solves this by meeting you where you are: in natural language.

What Cortex Actually Does

At its core, Cortex is a conversational interface layered on top of the Traylinx CLI. It:

- Listens to your plain English input.

- Classifies your intent (figures out what you want to do).

- Executes the right CLI command.

- Formats the output nicely.

- Remembers the context of your conversation.

Crucially, it’s not just a wrapper. It’s built with production-grade features that make it genuinely useful for real work:

1. Fast Reflexes + Deep Thinking

Cortex uses a two-tier system to figure out what you want:

- Reflex Tier (Speed): For common, simple stuff like “start my agent,” it uses fast pattern matching. No LLM call needed, and it responds in under 50ms.

- Cognitive Tier (Smarts): For complex requests like “Find offline agents that can translate documents,” it uses SwitchAILocal to reason about your intent.

This hybrid approach gives you the best of both worlds: speed when it’s simple, and intelligence when it gets complicated. And if the LLM is down? The reflex tier keeps working, because pattern matching never calls the LLM.
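To make the two tiers concrete, here is a minimal sketch of how a reflex-first classifier with an optional LLM fallback could be wired. The pattern table, the function names, and the `cognitive` callback are assumptions made for illustration; they are not Cortex’s actual internals.

```python
import re
from typing import Callable, Optional, Tuple

# Hypothetical reflex table: regex -> command template (illustrative only).
REFLEX_PATTERNS = [
    (re.compile(r"^(?:start|run) (?:my )?(?P<agent>[\w-]+) agent$"), "tx run {agent}-agent"),
    (re.compile(r"^stop (?:my )?(?P<agent>[\w-]+) agent$"), "tx stop {agent}-agent"),
]


def reflex_classify(text: str) -> Optional[str]:
    """Reflex tier: pure pattern matching, no LLM call."""
    normalized = text.strip().lower()
    for pattern, template in REFLEX_PATTERNS:
        match = pattern.match(normalized)
        if match:
            return template.format(**match.groupdict())
    return None


def classify(
    text: str,
    cognitive: Optional[Callable[[str], Optional[str]]] = None,
) -> Tuple[Optional[str], str]:
    """Try the reflex tier first, then the (assumed) LLM-backed tier."""
    command = reflex_classify(text)
    if command is not None:
        return command, "reflex"
    if cognitive is not None:
        try:
            command = cognitive(text)
        except Exception:
            command = None  # LLM unavailable: degrade instead of crashing
        if command is not None:
            return command, "cognitive"
    return None, "unresolved"
```

With this shape, an outage in the cognitive tier only affects requests the reflex table cannot match.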

2. It Actually Remembers Context

Cortex isn’t a goldfish; it remembers what you were just talking about.

You: run my translation agent

🤖 Executing: tx run translation-agent

✅ Agent started

You: show its logs

🤖 Executing: tx logs translation-agent

[logs appear...]

You: stop it

🤖 Executing: tx stop translation-agent

✅ Agent stopped

See how “its” and “it” worked? Cortex tracks the active agent, the last command, your auth status, and more. This lets you have a natural, flowing conversation without constantly repeating names and IDs.
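One way to picture the context tracking is a small session object that remembers the active agent and resolves pronouns against it. This is a sketch under assumptions: `SessionContext`, `remember`, and `resolve_agent` are hypothetical names, and the real tracker also covers auth status and more.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class SessionContext:
    """Hypothetical subset of the state Cortex is described as tracking."""
    active_agent: Optional[str] = None
    last_command: Optional[str] = None


_PRONOUN = re.compile(r"\b(?:it|its)\b", re.IGNORECASE)


def remember(ctx: SessionContext, command: str, agent: str) -> None:
    """Record the command that just ran and the agent it targeted."""
    ctx.last_command = command
    ctx.active_agent = agent


def resolve_agent(text: str, ctx: SessionContext) -> Optional[str]:
    """Map "it" / "its" to the active agent, or None if nothing is active."""
    if ctx.active_agent and _PRONOUN.search(text):
        return ctx.active_agent
    return None
```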

3. It Asks Disambiguating Questions

If you’re vague, Cortex doesn’t just crash. It asks for help.

You: show me the logs

❓ Which agent's logs would you like to view?

You: the translation one

🤖 Executing: tx logs translation-agent

This is huge for usability. It guides you to the right command instead of slapping you with an error code.
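A follow-up answer like “the translation one” still has to be mapped to a concrete agent. The sketch below shows one simple way to do that with word overlap; the helper name and the matching rule are assumptions, not how Cortex necessarily does it.

```python
import re
from typing import List, Optional


def pick_agent(reply: str, agents: List[str]) -> Optional[str]:
    """Return the single agent whose name shares a word with the reply.

    Returns None when zero or several agents match, which is the cue
    to ask the user another question instead of guessing.
    """
    words = set(re.findall(r"[a-z0-9]+", reply.lower()))
    matches = []
    for agent in agents:
        # Ignore the generic "agent" suffix so it cannot match everything.
        tokens = set(re.findall(r"[a-z0-9]+", agent.lower())) - {"agent"}
        if words & tokens:
            matches.append(agent)
    return matches[0] if len(matches) == 1 else None
```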

4. No Magic, Just Commands

We believe in transparency. You always see exactly what command is being run.

You: find agents that can search

🤖 Executing: tx discover --capability search

This builds trust—you know exactly what’s happening—and it helps you learn the CLI syntax simply by using it.

5. Safety First

For destructive stuff, Cortex puts up guardrails.

You: delete my agent

⚠️ About to execute: tx delete my-agent

This will permanently remove the agent and its data.

Continue? [y/N]

You can tweak what counts as “dangerous” in your config, but by default, it watches your back.
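The guardrail can be sketched as a check that runs before execution. The set of destructive subcommands below is an assumption standing in for whatever your config defines, and the function names are hypothetical.

```python
import shlex
from typing import FrozenSet

# Assumed default; the real list lives in the Cortex config.
DESTRUCTIVE_SUBCOMMANDS: FrozenSet[str] = frozenset({"delete"})


def needs_confirmation(
    command: str, destructive: FrozenSet[str] = DESTRUCTIVE_SUBCOMMANDS
) -> bool:
    """True if the command is a tx subcommand flagged as destructive."""
    parts = shlex.split(command)
    return len(parts) >= 2 and parts[0] == "tx" and parts[1] in destructive


def may_execute(
    command: str, answer: str, destructive: FrozenSet[str] = DESTRUCTIVE_SUBCOMMANDS
) -> bool:
    """Safe commands always pass; destructive ones need an explicit yes.

    An empty answer counts as "no", matching the [y/N] default.
    """
    if not needs_confirmation(command, destructive):
        return True
    return answer.strip().lower() in {"y", "yes"}
```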

6. Persistent Sessions

Restart your terminal? No problem. Cortex saves your session history to disk (using SQLite + JSONL), so you can pick up right where you left off.

$ tx cortex chat

📂 Restored session from 2 hours ago

You: what was I doing earlier?

🤖 You were working with the translation-agent. You started it, viewed logs, and then stopped it.
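As a rough illustration of the SQLite + JSONL split, the sketch below keeps key/value session state in SQLite and appends each conversation turn to a JSONL transcript. File names, the table schema, and the function names are assumptions, not the layout Cortex actually uses on disk.

```python
import json
import sqlite3
import time
from pathlib import Path
from typing import Any, Dict, List


def open_store(directory: Path) -> sqlite3.Connection:
    """Open (or create) the session database inside the given directory."""
    directory.mkdir(parents=True, exist_ok=True)
    conn = sqlite3.connect(str(directory / "sessions.db"))
    conn.execute(
        "CREATE TABLE IF NOT EXISTS session (key TEXT PRIMARY KEY, value TEXT)"
    )
    return conn


def save_state(conn: sqlite3.Connection, key: str, value: Any) -> None:
    """Upsert one piece of session state, stored as JSON text."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO session (key, value) VALUES (?, ?)",
            (key, json.dumps(value)),
        )


def load_state(conn: sqlite3.Connection, key: str, default: Any = None) -> Any:
    row = conn.execute("SELECT value FROM session WHERE key = ?", (key,)).fetchone()
    return json.loads(row[0]) if row else default


def append_transcript(directory: Path, role: str, text: str) -> None:
    """Append one turn to the JSONL transcript (one JSON object per line)."""
    entry = {"ts": time.time(), "role": role, "text": text}
    with open(directory / "transcript.jsonl", "a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry) + "\n")


def read_transcript(directory: Path) -> List[Dict[str, Any]]:
    path = directory / "transcript.jsonl"
    if not path.exists():
        return []
    with open(path, encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]
```

Append-only JSONL keeps the transcript easy to inspect with ordinary text tools, while SQLite handles the state that needs to be looked up by key.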

Real-World Test: 96.3% Success Rate

We didn’t just ship this and hope for the best. We threw 27 comprehensive real-world tests at it—everything from fuzzy references (“that one”) to complex command chains.

Result: 96.3% pass rate (26 of 27 tests).

The only hiccup? Ordinal references like “pick the first one” aren’t fully wired up yet (coming in the next patch). But the core functionality is rock solid.

Here’s a snippet from a live test:

$ tx cortex chat "what's my auth status?"

🤖 Executing: tx whoami

✅ Command completed successfully

Duration: 6427ms

Traylinx CLI

Email: sebastian.schkudlara@gmail.com

User ID: 9ff2839a-a3b8-4676-b550-f68bcf3e5186

Name: Sebastian Schkudlara

Token Expires: 2026-02-05 19:58:32 UTC

Natural language in. Structured output out. It just works.

Architecture: How It Works

For the curious developers, here’s the setup:

Component Interaction: Cortex sits as an orchestration layer. It has an Intent Classifier (Pattern + SwitchAI), a Command Executor (runs the actual subprocesses), and a Session Manager (SQLite-based).

Key Modules:

- Intent Classifier: Extracts parameters and scores confidence.

- Command Executor: Handles the subprocesses and safety gates.

- Session Manager: Manages history and context accumulation.

- SwitchAI Client: Connects to our local LLM gateway for the “smart” parts.

- Conversation Layer: Handles the back-and-forth logic and state.

- Tool Calling: Exposes CLI commands as function definitions for the LLM.

SwitchAILocal Integration: We use SwitchAILocal to handle the reasoning. It’s smart enough to route to fast local models for quick classification, ensuring you’re not waiting on a cloud API just to list a directory.
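To illustrate the Tool Calling module, here is a sketch of CLI commands described as function definitions and of turning a model’s tool call back into an argument list. The tool names, the schema layout, and the `to_argv` helper are assumptions for illustration; only the `tx` commands themselves come from the examples above.

```python
from typing import Any, Dict, List

# Hypothetical tool definitions in a JSON-Schema-like shape.
TOOLS: Dict[str, Dict[str, Any]] = {
    "tx_run": {
        "description": "Start an agent by name.",
        "parameters": {"agent": {"type": "string"}},
        "required": ["agent"],
        "argv": ["tx", "run", "{agent}"],
    },
    "tx_logs": {
        "description": "Show the logs of an agent.",
        "parameters": {"agent": {"type": "string"}},
        "required": ["agent"],
        "argv": ["tx", "logs", "{agent}"],
    },
    "tx_discover": {
        "description": "Find agents that offer a capability.",
        "parameters": {"capability": {"type": "string"}},
        "required": ["capability"],
        "argv": ["tx", "discover", "--capability", "{capability}"],
    },
}


def to_argv(tool_call: Dict[str, Any]) -> List[str]:
    """Validate a tool call and expand it into a subprocess argument list."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    spec = TOOLS[name]
    args = tool_call.get("arguments", {})
    missing = [param for param in spec["required"] if param not in args]
    if missing:
        raise ValueError(f"missing arguments for {name}: {missing}")
    # Build a list rather than a shell string so values are never shell-parsed.
    return [part.format(**args) for part in spec["argv"]]
```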

Performance: Fast Enough to Feel Native

Speed is a feature. If Cortex feels sluggish, you won’t use it.

| Operation | Target | Actual | Status |
|---|---|---|---|
| Pattern matching | <50ms | ~20ms | ✅ |
| LLM classification | <2s | ~800ms | ✅ |
| Session load | <100ms | ~40ms | ✅ |
| Command execution | Real-time | Real-time | ✅ |

The secret sauce is Intent Caching. We cache frequently used intents for an hour. If you send the same LLM-classified request five times, only the first one hits the LLM; the rest are served instantly from the cache. Our hit rate in testing is over 80%.
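The caching idea can be sketched as a small time-to-live cache in front of the classifier. The class name, the normalization rule, and the injectable clock are assumptions; the one-hour lifetime is taken from the description above.

```python
import time
from typing import Callable, Dict, Tuple


class IntentCache:
    """Cache classified intents for a fixed time-to-live (default: 1 hour)."""

    def __init__(
        self,
        ttl_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self.hits = 0
        self.misses = 0
        self._entries: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def _key(text: str) -> str:
        # Normalize case and whitespace so trivial variations share an entry.
        return " ".join(text.lower().split())

    def classify(self, text: str, classifier: Callable[[str], str]) -> str:
        """Return a cached command, or call the classifier and store the result."""
        key = self._key(text)
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1
            return entry[1]
        self.misses += 1
        command = classifier(text)
        self._entries[key] = (now, command)
        return command

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

Five identical requests produce one miss and four hits, which is where a hit rate of 80% comes from in the simplest case.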

Why This Matters for You

If you’re building on Traylinx, Cortex is a quality-of-life upgrade:

- Instant Onboarding: New devs can just ask “how do I deploy?” and get a working command.

- Focus: Stay in your terminal. No more context switching to the browser for docs.

- Automation: Cortex is scriptable. tx cortex chat "do X" works in scripts too.

- Learning: It teaches you the CLI as you use it.
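For the automation case, a script can shell out to the one-shot form of the command. The sketch below assumes `tx` is on your PATH; the wrapper names are hypothetical, and how Cortex reports failures (exit codes, output format) is not specified here, so check that before relying on it.

```python
import subprocess
from typing import Callable, List


def cortex_argv(request: str) -> List[str]:
    """Build the argument list for a one-shot Cortex request."""
    return ["tx", "cortex", "chat", request]


def ask_cortex(
    request: str,
    timeout: float = 60.0,
    runner: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> subprocess.CompletedProcess:
    """Run a one-shot request and capture its output as text.

    The runner is injectable so scripts can be tested without the CLI installed.
    """
    return runner(
        cortex_argv(request),
        capture_output=True,
        text=True,
        timeout=timeout,
    )
```

Calling `ask_cortex("what's my auth status?")` then gives you a `CompletedProcess` whose `stdout` holds whatever the CLI printed.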

The OpenClaw DNA

If you follow our OpenClaw work, you’ll spot some familiar DNA here.

- Persona System: Cortex uses a PERSONA.md to define its personality (helpful, precise, safety-conscious).

- Workspace Bootstrap: It sets up USER.md and TOOLS.md so you can customize its behavior without touching code.

- Transcripts: Everything is stored in JSONL, making it easy to debug or analyze session history.

What’s Next?

Cortex v1.0 is ready for production, but we have big plans:

- Semantic Routing (Q1): Using vector embeddings to “fuzzy match” intents even better.

- Autonomous Orchestration (Q2): Multi-step workflows like “deploy, test, and notify me.”

- Team Collaboration (Q3): Shared sessions where you can “pass the terminal” to a teammate.

Give It a Spin

Cortex is part of Traylinx CLI v0.4.0+.

# Upgrade Traylinx CLI

pip install --upgrade traylinx-cli

# Start SwitchAILocal (for the brain)

switchai start

# Start chatting

tx cortex chat

We’d love to hear what you think. Drop us a line on GitHub or join the Discord.

TL;DR: Traylinx Cortex turns your CLI into a conversational partner. It understands what you mean, runs the right commands, and teaches you along the way. Stop memorizing, start talking.

$ tx cortex chat

You: let's build something

Sebastian Schkudlara
