Traylinx Cortex: Giving Your AI a Persistent Memory (Finally!)

Let’s be honest: talking to most AI chatbots is like meeting someone for the first time, over and over again. You explain your project, give them context, and they help you out. Great! But close the tab, come back tomorrow, and… blank stare. “Hi! How can I help you today?”

It’s frustrating. It’s inefficient. And frankly, it’s not how intelligent partners should work.

That’s why we built Traylinx Cortex, the cognitive core of the Traylinx ecosystem. It’s designed to solve the “amnesic AI” problem by giving your agents a persistent, unified memory that spans sessions and even different applications.

The Problem: Statelessness is a Feature (and a Bug)

LLMs (Large Language Models) are stateless by design. You send a prompt, they send a completion. They don’t “remember” anything. To create the illusion of a conversation, we have to send the entire chat history back to them with every new message.

This works fine for short chats. But for long-running projects? It’s a disaster.

- Context Window Limits: Eventually, the history no longer fits in the model’s context window.

- Cost: You’re paying to re-process the same “Hi, my name is Sebastian” tokens thousands of times.

- Fragmentation: Your “Coding Agent” doesn’t know what you told your “Planning Agent.”
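To make the cost point concrete, here is a rough sketch of how the number of processed tokens grows when the full history is resent on every turn. The function name and the "one word is one token" rule are simplifying assumptions for illustration; real tokenizers count differently.

```python
def tokens_processed(messages):
    """Total tokens sent to the model if every turn resends the full history."""
    total = 0
    history_tokens = 0
    for message in messages:
        # Crude assumption: one whitespace-separated word is about one token.
        history_tokens += len(message.split())
        # Each turn pays for the whole history so far, not just the new message.
        total += history_tokens
    return total


if __name__ == "__main__":
    chat = ["Hi, my name is Sebastian"] + ["Tell me more"] * 99
    print(tokens_processed(chat))  # 15350
```

The 100 messages contain only 302 words of new content, yet the model ends up processing roughly 50 times that, and the gap widens with every turn.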

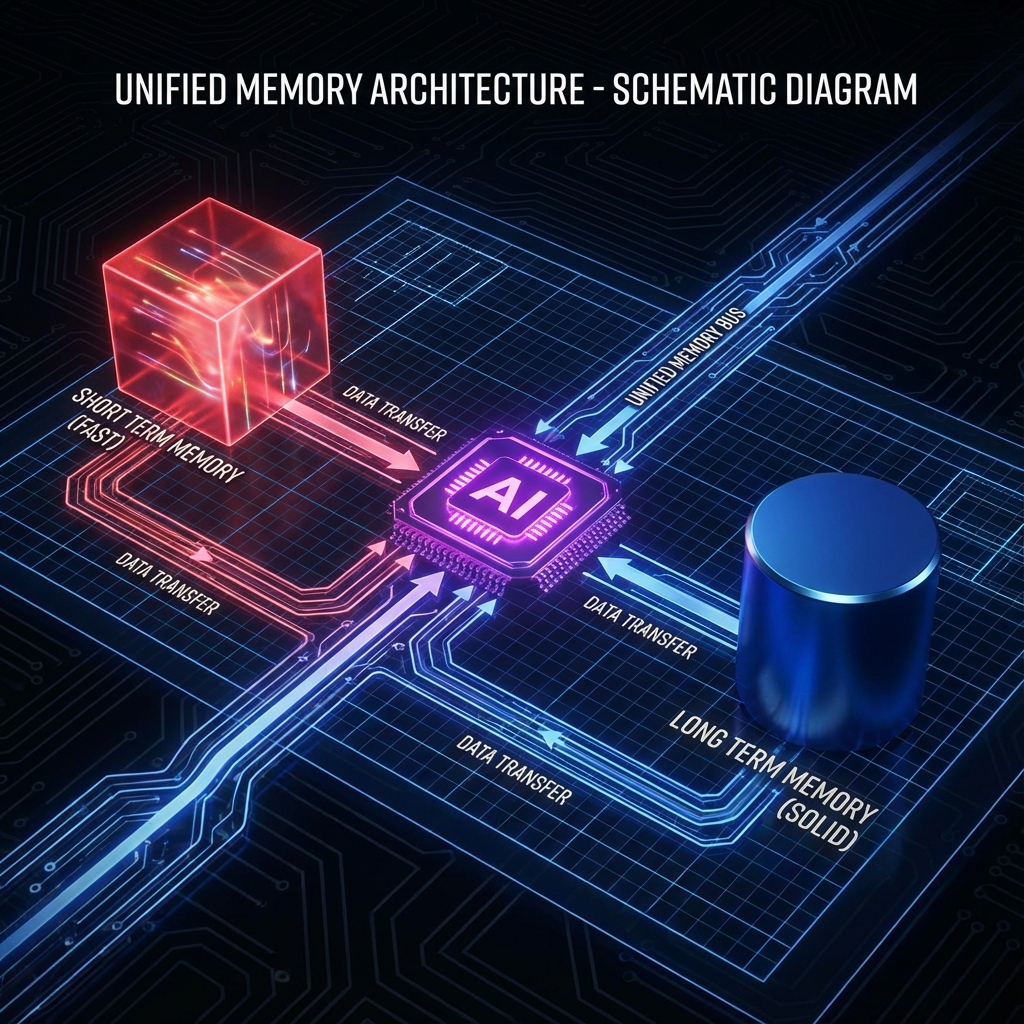

The Solution: Unified Memory Architecture

Traylinx Cortex introduces a Unified Memory system that mimics how human memory works: a blend of fast, fleeting short-term memory and deep, consolidated long-term memory.

1. Short-Term Memory (STM) - The “Working Bench”

- Technology: Redis (LIST data structure)

- Role: Keeps the immediate conversation flowing fast.

- Behavior: It holds roughly the last 20 messages. It’s fast (sub-millisecond reads), ephemeral (it expires after 24 hours of inactivity), and perfect for “what did I just say?” context.
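The behavior described above can be sketched without a running Redis server. The class below is an in-memory stand-in, not Cortex’s actual code: the class name, method names, and injectable clock are assumptions made for illustration. The comments note which Redis commands would plausibly play the same role on a real LIST.

```python
import time
from collections import deque


class ShortTermMemory:
    """In-memory stand-in for a capped, expiring Redis LIST (illustrative only)."""

    def __init__(self, max_messages=20, ttl_seconds=24 * 60 * 60, clock=time.time):
        self.max_messages = max_messages
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._sessions = {}  # session_id -> (deque of messages, last activity time)

    def append(self, session_id, message):
        messages = self._live_messages(session_id)
        # deque(maxlen=...) drops the oldest entry, like RPUSH followed by LTRIM.
        messages.append(message)
        # Refreshing the timestamp plays the role of resetting the key's EXPIRE.
        self._sessions[session_id] = (messages, self.clock())

    def recent(self, session_id):
        """Return the current window of messages, oldest first."""
        return list(self._live_messages(session_id))

    def _live_messages(self, session_id):
        entry = self._sessions.get(session_id)
        if entry is None or self.clock() - entry[1] > self.ttl_seconds:
            # Unknown or expired session: start from an empty window.
            return deque(maxlen=self.max_messages)
        return entry[0]
```

Because every append refreshes the timestamp, the 24-hour window measures inactivity rather than age, which matches the expiry behavior described above.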

2. Long-Term Memory (LTM) - The “Library”

- Technology: PostgreSQL + pgvector

- Role: Permanent storage of facts, preferences, and important details.

- Behavior: This is where the magic happens. A background process (we call it the “Consolidator”) constantly watches your conversation. When it spots a fact (“User is building a Ruby app”, “User prefers dark mode”), it extracts it, turns it into a vector embedding, and stores it forever.
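Here is a minimal sketch of the store-and-recall idea, assuming a toy word-count “embedding” and an in-memory list in place of a real embedding model and a PostgreSQL table. All names are hypothetical. With pgvector, the ranking step would typically be an `ORDER BY` on a distance operator such as `<=>` (cosine distance) rather than a Python sort.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class LongTermMemory:
    """In-memory stand-in for a table of facts with a vector column."""

    def __init__(self):
        self._facts = []  # list of (fact_text, embedding) pairs

    def store(self, fact):
        # What a consolidation step would do once it has extracted a fact.
        self._facts.append((fact, embed(fact)))

    def recall(self, query, top_k=2):
        # Rank stored facts by similarity to the query and keep the best ones.
        query_vec = embed(query)
        ranked = sorted(
            self._facts,
            key=lambda item: cosine_similarity(query_vec, item[1]),
            reverse=True,
        )
        return [fact for fact, _ in ranked[:top_k]]


if __name__ == "__main__":
    ltm = LongTermMemory()
    ltm.store("User is building a Ruby app")
    ltm.store("User prefers dark mode")
    print(ltm.recall("Can you help with my Ruby app?", top_k=1))
```

Word counts only match exact words, so this toy version would miss paraphrases that a learned embedding would catch.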

How It Works: The Chat Flow

When you send a message to a Cortex-powered agent, a lot happens in the blink of an eye:

- The Handshake: Your request hits the API Gateway. Traylinx Sentinel checks your token (because security first!).

- The Scrub: Before the LLM sees anything, our PII Scrubber (powered by Microsoft Presidio) redacts sensitive info. “My phone number is 555-0199” becomes “My phone number is [PHONE_NUMBER]”.

- The Recall: Cortex queries the LTM: “What do we know about this user related to this message?” It retrieves relevant facts using vector similarity search.

- The Synthesis: The Orchestrator (built on LangGraph) combines your current message + STM context + retrieved LTM facts into a rich prompt.

- The Response: The LLM generates a response that feels surprisingly personal. “Here’s the Ruby code you asked for (since I know you’re working on that app).”
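The scrub and synthesis steps can be sketched in a few lines. This is an illustration under stated assumptions, not Cortex’s implementation: a single regular expression stands in for the Presidio-based scrubber, the function names and prompt layout are hypothetical, and token checking and the LLM call are left out.

```python
import re

# Simplistic pattern for numbers like 555-0199; a stand-in for a real PII engine.
PHONE_PATTERN = re.compile(r"\b\d{3}-\d{4}\b")


def scrub(text):
    """Replace phone-like numbers with a placeholder before the LLM sees them."""
    return PHONE_PATTERN.sub("[PHONE_NUMBER]", text)


def build_prompt(message, recent_messages, recalled_facts):
    """Combine the scrubbed message, STM context, and LTM facts into one prompt."""
    sections = [
        "Known facts about the user:",
        *[f"- {fact}" for fact in recalled_facts],
        "Recent conversation:",
        # Assumption: earlier messages were already scrubbed when they were stored.
        *recent_messages,
        "Current message:",
        scrub(message),
    ]
    return "\n".join(sections)


if __name__ == "__main__":
    print(
        build_prompt(
            "My phone number is 555-0199",
            recent_messages=["user: hello"],
            recalled_facts=["User is building a Ruby app"],
        )
    )
```

Scrubbing inside `build_prompt` means the raw message never reaches the prompt, whichever code path calls it.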

Why This Changes Everything

Imagine an ecosystem of agents that all share this brain.

You tell your Project Manager Agent: “We’re delaying the launch to December.” Later, you ask your Social Media Agent: “Draft a tweet about the launch.” Instead of suggesting “Launching next week!”, it says: “Get ready for our big reveal in December!”

It didn’t hallucinate. It remembered.

This is the power of Traylinx Cortex. It turns isolated chatbots into a cohesive, intelligent team that knows you, remembers your context, and gets smarter over time.

Ready to Upgrade Your AI’s Brain?

Cortex is open source and production-ready. You can spin it up with Docker in minutes.

git clone https://github.com/traylinx/traylinx_cortex

cd traylinx_cortex

docker-compose up -d

Stop settling for amnesic AI. Give your agents a cortex.

Next Up: In our next post, we’ll explore how these agents actually find each other using the Traylinx Router. Stay tuned!

Sebastian Schkudlara

